AUTODIDACT – Automated Video Data Annotation to Empower the ICU Cockpit Platform for Clinical Decision Support

Description

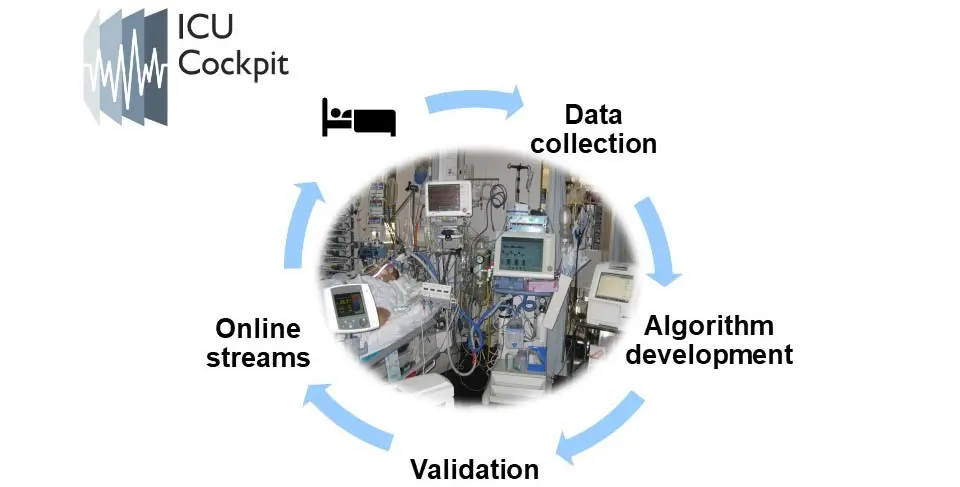

Monitoring the diverse sensor signals of patients in intensive care can be key to detecting potentially fatal emergencies. To do this automatically, however, the monitoring system has to know what is currently happening to the patient: if, for example, the patient is being moved by medical staff, this would explain a sudden peak in heart rate, which would therefore not be a sign of an emergency. To create such annotations of the data automatically, the ZHAW Computer Vision, Perception and Cognition group has teamed up with the Intensive Care Unit (ICU) of University Hospital Zurich, led by Prof. Emanuela Keller, to equip the ICU Cockpit software (compare NRP 75 Research project) with video analysis capabilities. Cameras in the patient room deliver a constant, privacy-preserving video stream of the patient’s bed (i.e., the video resolution is too low to identify any person). Based on this stream, the locations of the patient and medical staff are to be automatically detected and tracked in order to extract simple movement patterns, and these patterns are then used to classify whether a medical intervention is currently being performed on the patient and, if so, which one. The research challenge in this project is to realize such a system without access to many labels, i.e., to learn detection, tracking and classification in mainly unsupervised and self-supervised ways.
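The role of such annotations can be illustrated with a minimal sketch. It is not the project's implementation: the `Annotation` type, the label name `patient_moved_by_staff`, the function `flag_heart_rate_peaks` and the 120 bpm threshold are all hypothetical and chosen only for illustration. The sketch marks a heart-rate peak as explained when a video-derived annotation covers the same point in time, and as an alert otherwise.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Annotation:
    """A video-derived context label covering a time interval (in seconds)."""
    start: float
    end: float
    label: str  # hypothetical label name, e.g. "patient_moved_by_staff"


def flag_heart_rate_peaks(
    samples: List[Tuple[float, float]],
    annotations: List[Annotation],
    threshold_bpm: float = 120.0,  # illustrative value, not a clinical threshold
) -> List[Tuple[float, float, str]]:
    """Return (time, bpm, status) for every sample above the threshold.

    status is "explained:<label>" if an annotation covers the sample time,
    otherwise "alert".
    """
    results = []
    for t, bpm in samples:
        if bpm <= threshold_bpm:
            continue  # not a peak, nothing to report
        # First annotation whose interval contains the sample time, if any.
        covering = next((a for a in annotations if a.start <= t <= a.end), None)
        status = f"explained:{covering.label}" if covering else "alert"
        results.append((t, bpm, status))
    return results


if __name__ == "__main__":
    # Assumed toy data: (time in seconds, heart rate in bpm).
    heart_rate = [(0.0, 78.0), (10.0, 131.0), (20.0, 82.0), (30.0, 135.0)]
    context = [Annotation(start=5.0, end=15.0, label="patient_moved_by_staff")]
    for row in flag_heart_rate_peaks(heart_rate, context):
        print(row)
```

In this toy data, the peak at 10 s falls inside the annotated interval and is reported as explained, while the peak at 30 s has no covering annotation and is reported as an alert. A real system would need clinically validated criteria in place of the fixed threshold.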

Key Data

Project lead

Dr. Gagan Narula

Co-project lead

Project team

Daniel Baumann, Prof. Dr. Emanuela Keller, Pascal Sager

Project partners

Universitätsspital Zürich / Institut für Intensivmedizin

Project status

completed, 02/2022 - 12/2022

Institute/Centre

Centre for Artificial Intelligence (CAI)

Funding partner

Kanton Zürich / Digitalisierungsinitiative DIZH (Rapid-Action-Call)

Project budget

143'000 CHF