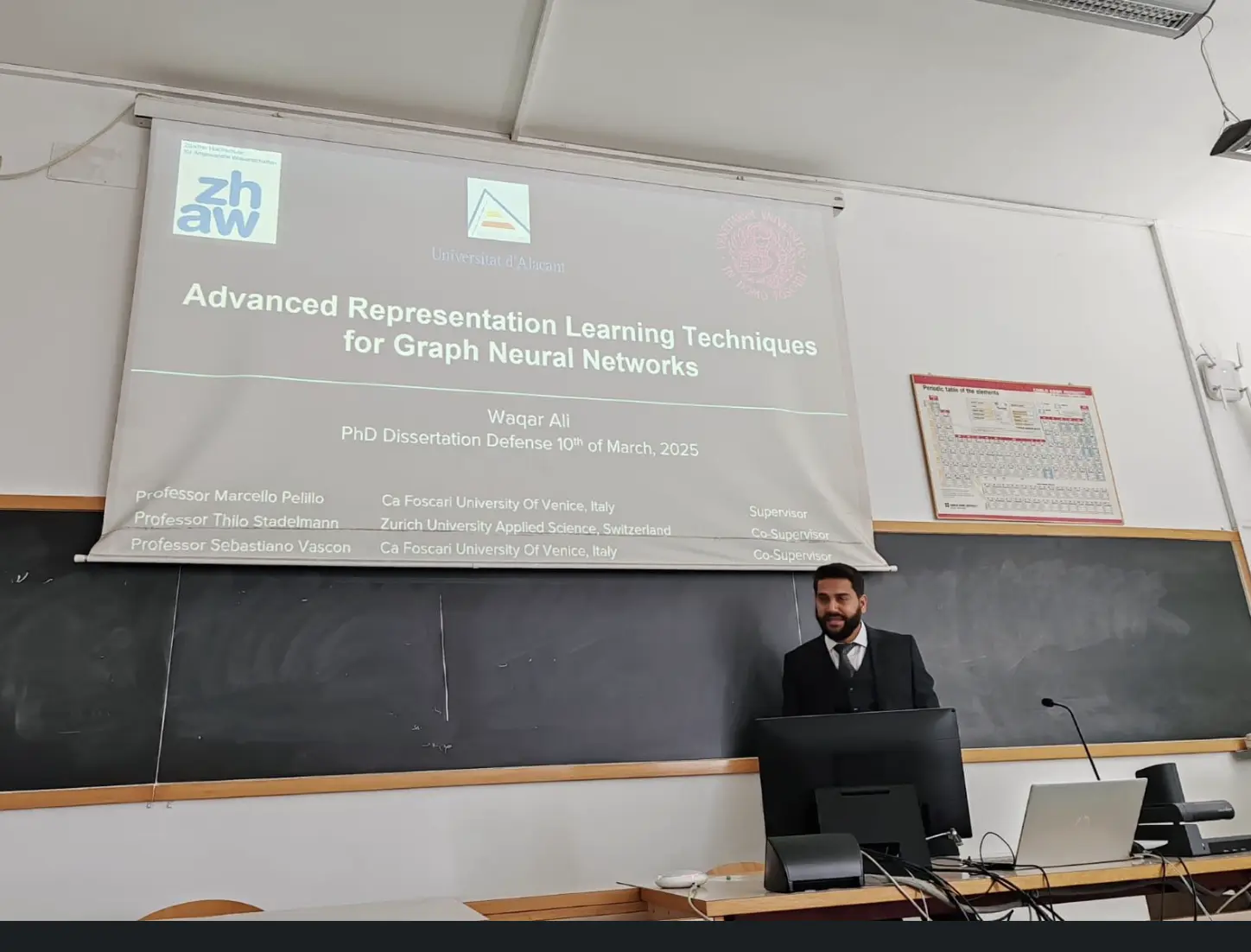

Waqar Ali successfully defended his PhD thesis on GNNs

Waqar Ali successfully defended his PhD thesis, "Advanced Representation Learning Techniques For Graph Neural Networks", at Ca' Foscari University of Venice. On behalf of the CAI, we extend our heartfelt congratulations to Waqar Ali!

Abstract: Over the past decade, deep learning models have become a key driver of modern artificial intelligence, enabling remarkable advances in analyzing and interpreting complex data in fields such as computer vision, speech recognition, and natural language processing. These models are mainly designed for regular, grid-structured data such as sequences and images; however, not all data conform to grid-like structures. A graph is a more general data structure that naturally represents entities and their relationships, making it well suited to domains such as social networks, biology, and chemistry.

Modern deep learning models face significant challenges when applied to graph data because of the inherent complexity and non-Euclidean nature of graphs. Graph Neural Networks (GNNs) have recently emerged as a powerful tool for capturing the intricate structures and relationships within graph data. In this thesis, we investigate several ways to enhance the performance of GNNs on standard graph learning tasks (e.g., graph and node classification) by introducing advanced graph representation learning techniques: graph pooling, graph augmentation, community-based message passing, and graph rewiring.

First, we focus on graph pooling, an essential GNN building block, and design three novel graph pooling methods for learning graph representations. These methods reduce a graph's size and complexity while preserving essential structural information, enabling GNN models to achieve superior performance on graph classification tasks. Second, we propose advanced graph augmentation techniques that generate weakly labelled data samples, improving the generalization and robustness of GNN models. These techniques include the Node-Dropping Augmentation strategy, which selectively removes less important nodes based on their degree, and a structure learning method that reconnects isolated nodes by learning attention-based relationships.
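
To give a feel for what degree-based node dropping means in general, here is a minimal sketch. It is not the Node-Dropping Augmentation strategy from the thesis, whose exact selection rule is not described here. It assumes, purely for illustration, that "less important" simply means "lowest degree", that the graph is undirected and stored as a Python adjacency dictionary, and that the function name `degree_based_node_drop` and its `drop_ratio` parameter are hypothetical.

```python
import random


def degree_based_node_drop(adj, drop_ratio=0.2, seed=None):
    """Hypothetical sketch: drop the lowest-degree fraction of nodes.

    adj: dict mapping node -> set of neighbours (assumed symmetric/undirected).
    Returns a new adjacency dict without the dropped nodes or their edges.
    """
    rng = random.Random(seed)
    nodes = list(adj)
    n_drop = int(len(nodes) * drop_ratio)
    # Shuffle first so that nodes with equal degree are dropped in random order;
    # Python's sort is stable, so the shuffled order survives among ties.
    rng.shuffle(nodes)
    nodes.sort(key=lambda v: len(adj[v]))
    dropped = set(nodes[:n_drop])
    # Keep surviving nodes and remove any edge pointing to a dropped node.
    return {
        v: {u for u in nbrs if u not in dropped}
        for v, nbrs in adj.items()
        if v not in dropped
    }


if __name__ == "__main__":
    # Star graph: hub 0 connected to leaves 1..4.
    star = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
    augmented = degree_based_node_drop(star, drop_ratio=0.4, seed=0)
    print(augmented)  # the hub survives, two random leaves are removed
```

Because the random seed only affects tie-breaking, repeated calls with different seeds yield different augmented views of the same graph while always preserving the high-degree hub.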

Third, we develop an advanced message passing framework designed for heterophilic graphs, where connected nodes may have dissimilar features or labels. This improved message passing method enhances the GNN's ability to capture diverse node attributes, leading to more accurate node classification.

Lastly, we present a novel graph rewiring method to overcome the over-squashing problem in GNN architectures. This method strategically adds edges to ensure effective communication and information flow within the graph, preserving long-range dependencies and improving overall model performance.
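
As a rough illustration of why adding edges helps information flow, the sketch below applies a deliberately naive rewiring rule. It is not the rewiring method from the thesis, which adds edges selectively. It assumes an undirected, connected graph stored as an adjacency dictionary and simply connects every pair of nodes that are exactly two hops apart; the names `two_hop_rewire` and `diameter` are hypothetical helpers. Shrinking the diameter means messages need fewer GNN layers to travel between distant nodes.

```python
from collections import deque


def two_hop_rewire(adj):
    """Hypothetical naive rewiring: link every pair of nodes two hops apart."""
    new_adj = {v: set(nbrs) for v, nbrs in adj.items()}
    for v, nbrs in adj.items():
        for u in nbrs:
            for w in adj[u]:
                # w is reachable from v via u; add v-w if not already adjacent.
                if w != v and w not in adj[v]:
                    new_adj[v].add(w)
                    new_adj[w].add(v)
    return new_adj


def diameter(adj):
    """Longest shortest-path length (BFS from every node; graph assumed connected)."""
    best = 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            for u in adj[v]:
                if u not in dist:
                    dist[u] = dist[v] + 1
                    queue.append(u)
        best = max(best, max(dist.values()))
    return best


if __name__ == "__main__":
    # Path graph 0-1-2-3-4.
    path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
    rewired = two_hop_rewire(path)
    print(diameter(path), diameter(rewired))  # prints: 4 2
```

This rule densifies the graph quickly and ignores which bottlenecks actually cause over-squashing, which is exactly why practical rewiring methods choose the added edges more strategically.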