Human-Factors-Engineering Laboratory

Laboratory Description

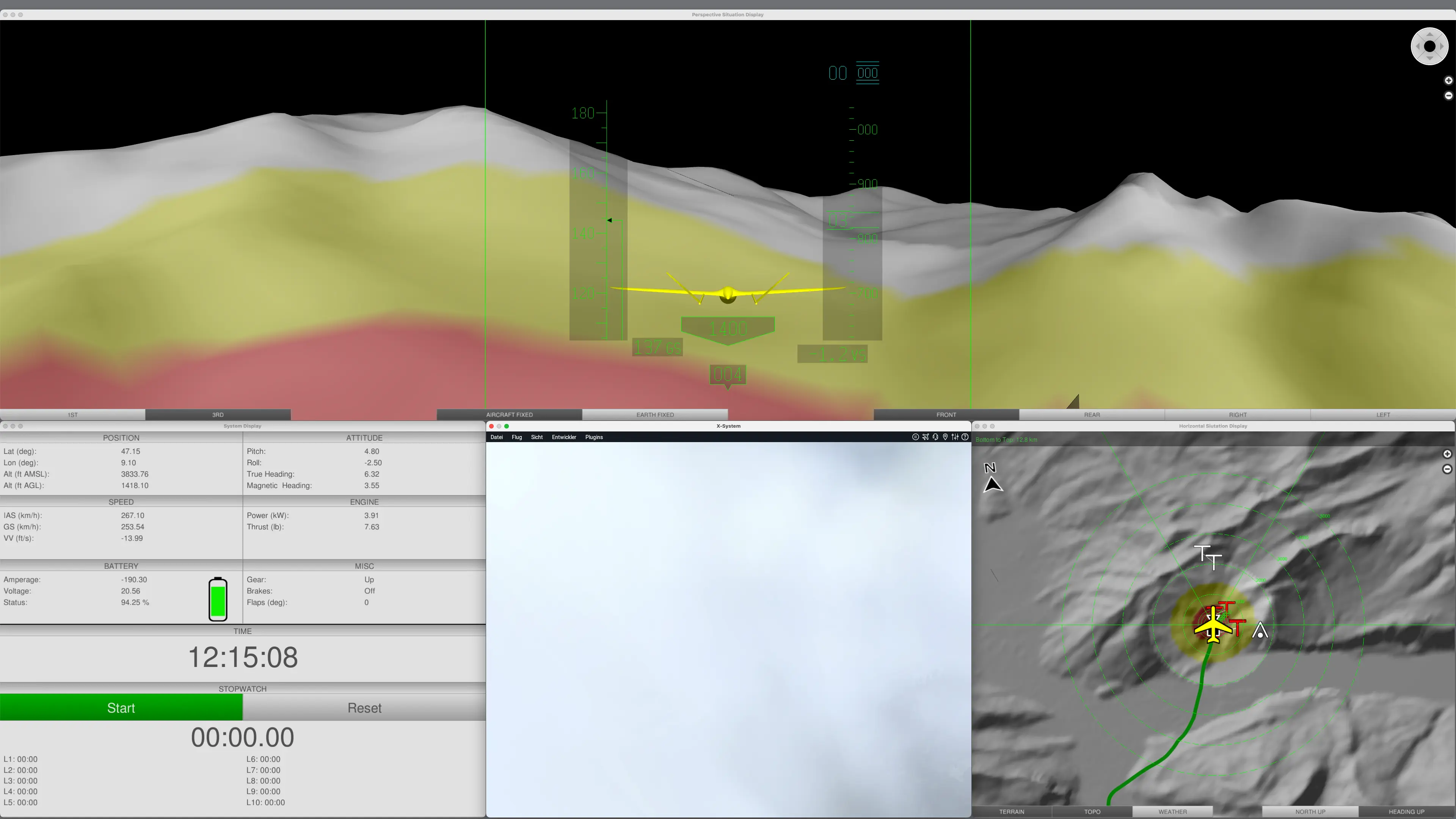

Remote Pilot Station with a panoramic screen

Remote Pilot Station:

Unmanned aircraft are to be gradually integrated into the European air traffic system. Alongside the aircraft itself, the ground station is one of the core components of such Remotely Piloted Aircraft Systems (RPAS). For safe flight operations, the ground station (Remote Pilot Station, RPS) must ensure effective and efficient cooperation between human and machine. In particular, the RPS must be designed so that the remote pilot's situational awareness is maintained at all times.

The ZHAW Centre for Aviation has developed a prototype ground station for this purpose. Its display medium is a large, high-resolution panoramic screen (4K Ultra High-Definition display) with a screen diagonal of 140 centimetres (55 inches).

In manned aviation, cockpit displays with synthetic vision (SV) or enhanced vision (EV) have become established. Such a visual display reduces cognitive load because it largely eliminates the need to mentally integrate one- and two-dimensional information. It thereby helps to prevent a loss of spatial situational awareness.

Based on such cockpit displays, three original display formats were defined and implemented for the panoramic screen of the ground station: Perspective Situation Display (PSD), Horizontal Situation Display (HSD) and System Situation Display (SSD). The sensor view of the on-board camera forms a fourth display that supplements the synthetic view in the PSD and HSD.

The Perspective Situation Display (PSD) offers the drone pilot a synthetic view that differs significantly from a corresponding cockpit display. The field of view in the PSD covers 180° horizontally and 60° vertically. The synthetic view takes into account that the remote pilot's workplace is physically separated from the aircraft. The pilot can choose between different egocentric and exocentric perspectives and can thus adapt the display to the situation and flight task.
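The difference between an egocentric and an exocentric perspective can be illustrated with a minimal sketch. It is not taken from the laboratory's software: the `Pose` type, the function names and the default offsets (`behind_m`, `above_m`) are hypothetical and chosen only for illustration. The egocentric camera sits at the aircraft position, whereas the exocentric camera is placed behind and above the aircraft and looks in the same direction.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    north_m: float      # position north of a reference point, in metres
    east_m: float       # position east of a reference point, in metres
    altitude_m: float   # height above the reference point, in metres
    heading_deg: float  # 0 = north, 90 = east


def egocentric_camera(aircraft: Pose) -> Pose:
    """Camera sits at the aircraft position and looks along its heading."""
    return Pose(aircraft.north_m, aircraft.east_m,
                aircraft.altitude_m, aircraft.heading_deg)


def exocentric_camera(aircraft: Pose,
                      behind_m: float = 50.0,
                      above_m: float = 20.0) -> Pose:
    """Camera trails the aircraft and looks along its heading.

    The default offsets are assumed values for illustration only.
    """
    heading = math.radians(aircraft.heading_deg)
    return Pose(
        north_m=aircraft.north_m - behind_m * math.cos(heading),
        east_m=aircraft.east_m - behind_m * math.sin(heading),
        altitude_m=aircraft.altitude_m + above_m,
        heading_deg=aircraft.heading_deg,
    )
```

For an aircraft flying due east, `exocentric_camera` places the viewpoint to the west of the aircraft and above it, so the aircraft itself is visible in the scene. The egocentric variant reproduces the view from the aircraft position.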

Cockpit Simulator:

The human factors engineering laboratory has its own cockpit simulator, which is currently designed as a one-person cockpit (single pilot operation). The simulator serves as a test platform for augmented reality head-mounted displays such as the Microsoft HoloLens or the Apple Vision Pro. The use of speech to control safety-critical on-board systems is also being investigated (Speech Enabled Cockpit).

Applications

- Practical training of students as part of the Human Factors Engineering course module, in particular practical design and evaluation of human-machine interfaces at the RPS.

- Development and analysis of operating and display concepts for the control of unmanned aerial vehicles without direct visual contact (BVLOS - Beyond Visual Line of Sight) at the RPS and the mRPS.

- Development and analysis of operating and display concepts for voice control of aircraft (Speech Enabled Cockpit) in the cockpit simulator.