Machine Learning in Visual Computing

Computer Vision and Machine Learning are at the heart of the digital revolution, transforming how we interact with technology and the world around us. We are dedicated to advancing these dynamic fields through comprehensive education, research, and practical applications.

Teaching Machines how to See

Our research initiatives push the boundaries of what's possible. Collaborate with esteemed faculty on projects in automated image interpretation, machine learning, visual communication, and more. Our work doesn't just stay in the lab – it has real-world implications, influencing everything from healthcare diagnostics to art.

Teaching Students how to Teach Machines how to See. Together with the Institute of Computer Science, we offer basic and advanced courses on Visual Computing.

Visual Interestingness

Interestingness -- the power of attracting or holding one's attention.

Our daily life is greatly influenced by what we consume and see. On the one hand, we decide based on our personal interests which news, movies, or other events we focus our attention on. On the other hand, most people are also very open to external visual stimuli that could influence their behavior. Understanding what triggers human attention and interest is therefore of great value, both for learning more about human visual perception and how it shapes what we judge to be interesting, and for commercial purposes.

For instance, models of what people consider 'interesting' could be used to automatically analyze video streams in video surveillance applications and alert users. Such models could also help people in their work by automatically highlighting 'interesting' facts that might otherwise be overlooked. This is especially important in time-critical scenarios where someone needs to quickly get an overview of many facts, such as in medical emergency care.

- F. Abdullahu and H. Grabner, Commonly Interesting Images. In Proc. European Conference on Computer Vision (ECCV), 2024. Data and Code on GitHub

- T. Koller and H. Grabner, Who wants to be a click-millionaire? On the influence of thumbnails and captions. In Proc. IEEE International Conference on Pattern Recognition (ICPR), 2022

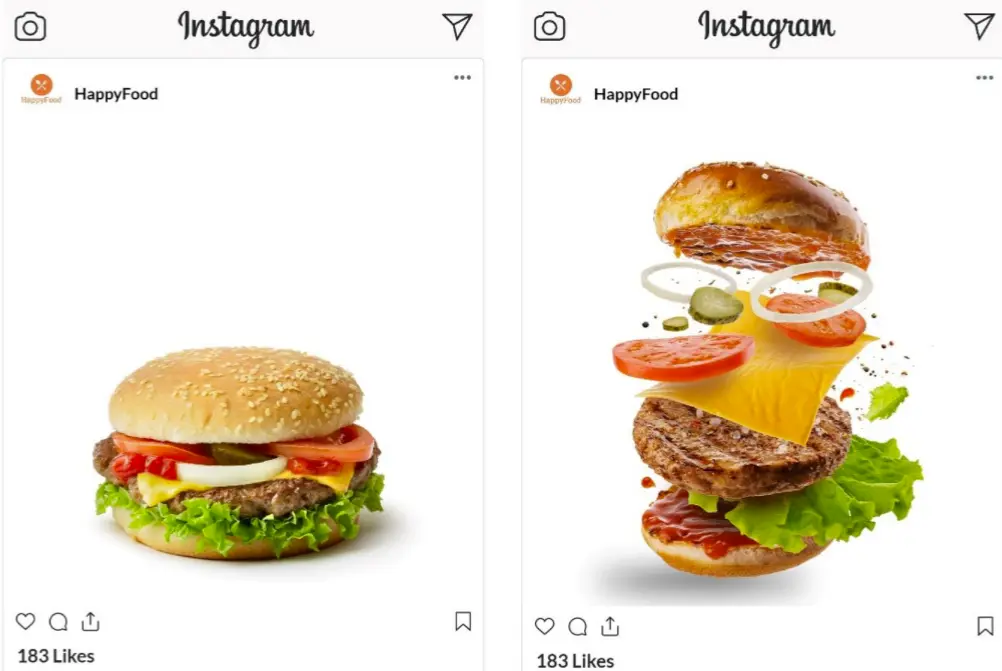

The Effect of Implied Motion

Over the past several decades, visual imagery has become the dominant element in modern advertising. A common content strategy involves depicting humans, animals, or objects in the midst of motion. Whereas previous research indicates that implied motion images enhance persuasion, it is unclear whether this effect is unique to depictions of moving humans or if it also applies to depictions of moving animals (e.g., a dolphin jumping out of the water) and moving objects (e.g., a car driving on a street, a burger being tossed in the air). Across a set of seven experimental studies, we provide robust evidence that images depicting animate and inanimate motion increase the persuasiveness of an advertisement and that this effect occurs through enhanced engagement. Our findings further indicate that the level of engagement is influenced by the complexity of the depicted motion, with more complex, nonlinear movements eliciting greater engagement than simpler, linear movements. Overall, this research contributes to the advertising literature by providing an empirically grounded account of implied motion imagery and by helping marketers create more effective advertising.

- ZHAW Medienlinguistik

- F. Bünzli, W. Weber, F. Abdullahu, and H. Grabner, Depicting Humans, Animals, and Objects in Motion: The Effect of Implied Motion on Engagement and Persuasion in Advertising. Journal of Advertising, 2024

- F. Bünzli, W. Weber, F. Abdullahu, and H. Grabner, Do Vectors of Motion Make Advertisements More Interesting? Annual Conference of the International Communication Association (ICA), 2023

Watching the World

All images are equal but some images are more equal than others.

"WATCHING THE WORLD, The Encyclopedia Of the Now" is an art, photography, exhibition, AI, big data, and online project that exclusively uses open data sources. Using publicly accessible webcams, it photographs the world live, simultaneously, around the clock, and across the globe. The recordings are presented on the website in real time and in various modes, developing a new way of seeing and, with the help of AI, a new form of photography.

On the webpage https://webcamaze.engineering.zhaw.ch, more than 10,000 webcams are analyzed in real time. If the images were printed out, they would stack up to the height of the Great Pyramid of Giza – every day! Without methods of machine learning and automatic image processing, such volumes could no longer be managed.

Most of the time, there is nothing "interesting" to see – but if you are at the right place at the right time, you see surprising, unexpected, bizarre, perhaps questionable images that invite reflection and discussion.

"SPY BOT 2000 - We believe the version of the arty bollocks write up is that this loads live webcam images from around the world and gets an AI to sort them into categories. Actually quite an interesting use of AI for a change, if a little creepy." -- b3ta.com

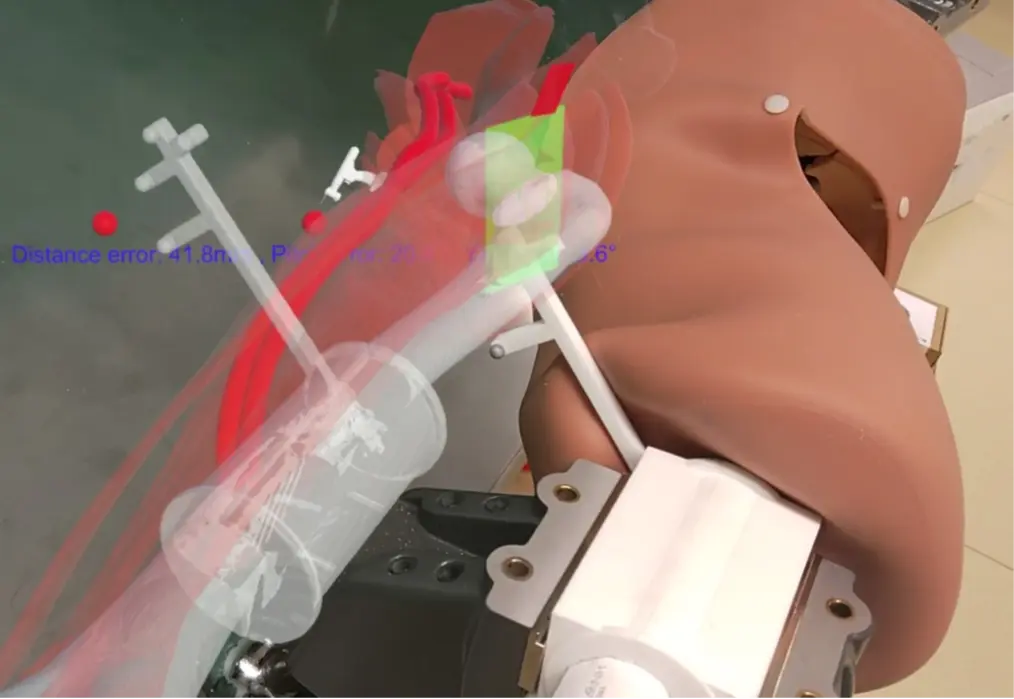

Surgical Proficiency

The project integrates adaptive AR guidance and AI techniques. By combining intuitive AR instructions with AI-driven analysis of human activity and behavior, we aim to optimize the usability of surgical training simulators.

It initiates a paradigm shift in surgical training, toward optimally prepared surgeons and even greater patient safety.

Training on patients according to the principle of “see one, do one and teach one” no longer corresponds to today’s requirements and technical opportunities for the education of surgical residents. During the Covid-19 pandemic, most hands-on training had to be discontinued, leading to an almost complete interruption of surgical education. Under the lead of the three clinical partners Kantonsspital St.Gallen, Centre Hospitalier Universitaire Vaudois, and Balgrist University Hospital, and endorsed by the Swiss Surgical Societies, novel standardized and proficiency-based surgical training curricula are defined and interfaced to simulation tools. The four implementation partners VirtaMed AG, Microsoft Mixed Reality & AI Lab Zurich, OramaVR SA, and Atracsys LLC, in collaboration with ETHZ and ZHAW, develop these innovative training tools, which range from online virtual reality simulation, augmented box trainers, and high-end simulators to augmented-reality-enabled open surgery and immersive remote operation room participation. The proposed developments introduce an entirely novel, integrative training paradigm that is installed and demonstrated on two example surgical modalities, laparoscopy and arthroscopy, while remaining fully generalizable to other interventions. This will set new standards both in Switzerland and abroad.

- L. Wu, M. Seibold, N. Cavalcanti, J. Hein, T. Gerth, R. Lekar, A. Hoch, L. Vlachopoulos, H. Grabner, P. Zingg, M. Farshad, and P. Fürnstahl, A novel augmented reality-based simulator for enhancing orthopedic surgical training. Computers in Biology and Medicine, Volume 185, 2025

- https://www.surgicalproficiency.ch

- ZHAW Impact

- OR-X, ROCS Balgrist

- ETH Computer Vision and Learning Group

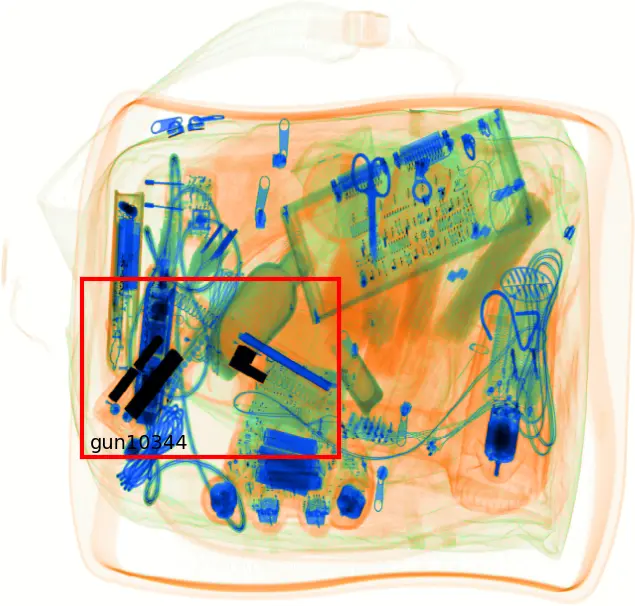

Target Recognition using Artificial Intelligence

X-ray images at airports are either checked manually or analyzed using object detectors that recognize pistols, pistol parts, ammunition, and knives. Commercial systems are closed-source and operate opaquely to the end user. Manufacturers of AI systems advertise high hit rates and low false alarm rates. However, these numbers cannot be interpreted meaningfully unless it is known which methods and data were used to evaluate the systems.
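
Why the evaluation data matters can be illustrated with a minimal sketch. All counts below are invented for illustration and do not come from the project or from any commercial system; the names `Counts`, `balanced`, and `realistic` are hypothetical. The sketch evaluates the same assumed detector behaviour (90% hit rate, 5% false alarm rate) on a balanced test set and on a set where threats are rare.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion counts for one evaluation set (hypothetical numbers)."""
    true_pos: int   # threat present, alarm raised
    false_neg: int  # threat present, no alarm
    false_pos: int  # no threat, alarm raised
    true_neg: int   # no threat, no alarm


def hit_rate(c: Counts) -> float:
    """Fraction of images with a threat that trigger an alarm."""
    return c.true_pos / (c.true_pos + c.false_neg)


def false_alarm_rate(c: Counts) -> float:
    """Fraction of harmless images that trigger an alarm."""
    return c.false_pos / (c.false_pos + c.true_neg)


def precision(c: Counts) -> float:
    """Fraction of alarms that correspond to a real threat."""
    return c.true_pos / (c.true_pos + c.false_pos)


# Assumed test sets: identical hit and false alarm rates, different threat prevalence.
balanced = Counts(true_pos=450, false_neg=50, false_pos=25, true_neg=475)
realistic = Counts(true_pos=9, false_neg=1, false_pos=495, true_neg=9405)

for name, counts in [("balanced", balanced), ("realistic", realistic)]:
    print(
        f"{name}: hit rate={hit_rate(counts):.2f}, "
        f"false alarm rate={false_alarm_rate(counts):.2f}, "
        f"precision={precision(counts):.3f}"
    )
```

Both sets report the same hit rate and false alarm rate, yet on the rare-threat set only a small fraction of alarms point to a real threat. Advertised rates alone therefore say little about operational behaviour.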

An independent and scientifically based evaluation of the systems is therefore important. This project is a collaboration between Casra, FHNW, and the ZHAW. Casra leads the project and creates X-ray images for training and testing. The FHNW examines the project from a psychological point of view, in particular by analyzing human-machine interaction. Our part is to create, train, and test different kinds of state-of-the-art object detectors. The goal is to identify the strengths and weaknesses of the entire systems and, based on this, to create a proposal for the certification of commercial systems. Is it possible to certify object detectors separately from X-ray machines?

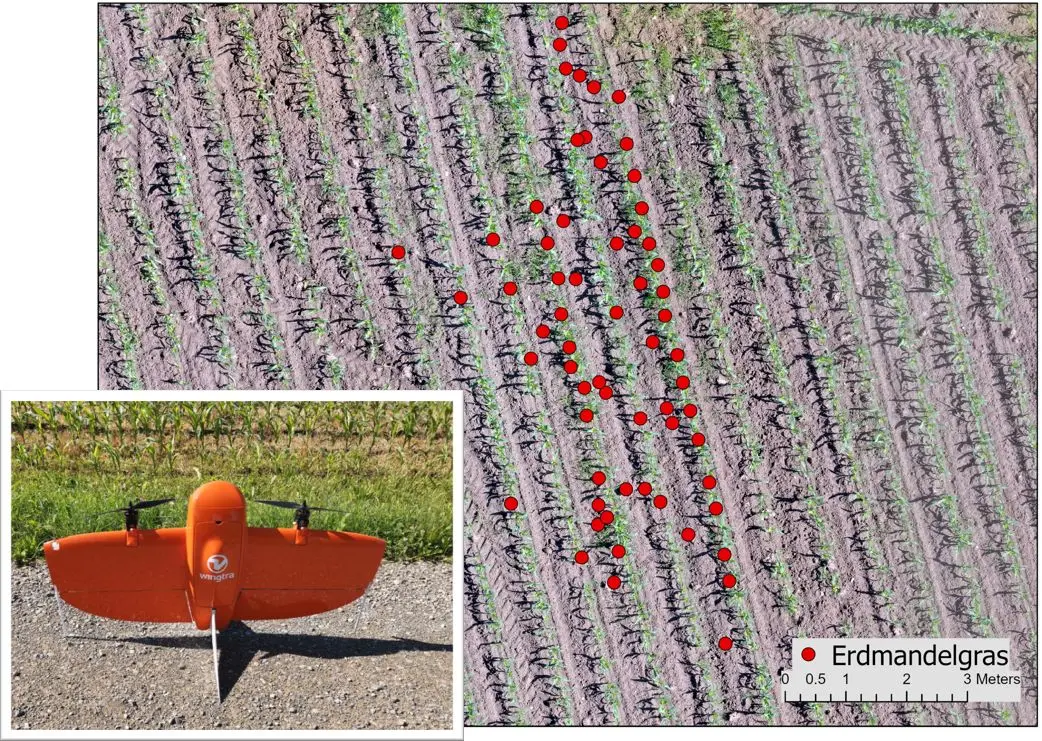

Detection of Yellow Nutsedge with Drones and Algorithms

Yellow nutsedge (Erdmandelgras, Cyperus esculentus) is an invasive neophyte that causes great damage to agriculture in Switzerland. The earlier yellow nutsedge is detected, the easier it is to remediate affected fields. There is currently no organized monitoring; the weed is typically discovered by chance. The aim of this project is to use drone images and computer vision methods to detect yellow nutsedge from the air. The project partners are the Institute of Natural Resource Sciences (Geoinformatics Research Group) and Agroscope. Aerial images of various infested fields with different crops are enriched with geocoordinates and manually annotated by experts. The annotated images are used to train a state-of-the-art deep neural network to identify and localize yellow nutsedge in new, unseen images. The detections are then mapped onto an overview orthophoto.
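
The last step, placing a detection on a map, can be sketched as follows. This is an illustration under stated assumptions, not the project's actual pipeline: it assumes each image comes with a six-coefficient affine geotransform in the GDAL convention, and the names `GeoTransform`, `pixel_to_world`, and `box_center_to_world`, as well as all coordinate values, are hypothetical.

```python
from typing import NamedTuple, Tuple


class GeoTransform(NamedTuple):
    """Affine pixel-to-world mapping, six coefficients in GDAL order."""
    x_origin: float      # world x of the upper-left corner of the image
    pixel_width: float   # world units per pixel column
    row_rotation: float  # 0 for a north-up image
    y_origin: float      # world y of the upper-left corner of the image
    col_rotation: float  # 0 for a north-up image
    pixel_height: float  # world units per pixel row, negative for north-up


def pixel_to_world(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Convert a pixel position (column, row) to world coordinates."""
    x = gt.x_origin + col * gt.pixel_width + row * gt.row_rotation
    y = gt.y_origin + col * gt.col_rotation + row * gt.pixel_height
    return x, y


def box_center_to_world(
    gt: GeoTransform, box: Tuple[float, float, float, float]
) -> Tuple[float, float]:
    """Map the center of a detection box (x_min, y_min, x_max, y_max) to world coordinates."""
    x_min, y_min, x_max, y_max = box
    return pixel_to_world(gt, (x_min + x_max) / 2, (y_min + y_max) / 2)


# Hypothetical north-up image with 2 cm pixels in a projected system (metres).
gt = GeoTransform(2680000.0, 0.02, 0.0, 1250000.0, 0.0, -0.02)
# Hypothetical detection box in pixel coordinates.
detection = (100.0, 200.0, 300.0, 400.0)

east, north = box_center_to_world(gt, detection)
print(f"Detection center: E={east:.2f} m, N={north:.2f} m")
```

With world coordinates for each detection, results from many overlapping drone images can be collected in one common reference frame and drawn onto the orthophoto.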

- ZHAW Geoinformatics Research Group

- M. Keller, J. Junghardt, H. Grabner, R. Total. Mit Drohnen und Algorithmen Erdmandelgras aufspüren. Gemüsebau Info, 18, 2022, 2

Team

“Great things in business are never done by one person, they are done by a team of people”

― Steve Jobs.